If you’re shopping for a new TV, gaming console, or home theater gear, you’ve likely come across HDMI 2.0 and HDMI 2.1 labels. While both are common, HDMI 2.1 is the newer standard—and it brings significant upgrades. Let’s break down the key differences.

Core Differences at a Glance

|

Feature

|

HDMI 2.0

|

HDMI 2.1

|

|

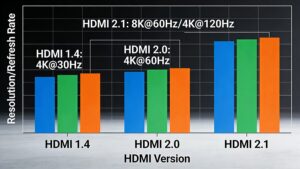

Bandwidth

|

Up to 18 Gbps

|

Up to 48 Gbps

|

|

Max Resolution

|

4K at 60Hz

|

8K at 60Hz; 4K at 120Hz

|

|

Variable Refresh Rate (VRR)

|

No

|

Yes (reduces screen tearing)

|

|

eARC

|

Standard ARC only

|

Enhanced ARC (higher audio quality)

|
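Some rough arithmetic shows why the bandwidth row drives the resolution row. The sketch below is an illustration under stated assumptions, not how HDMI actually budgets a link. It assumes 8-bit RGB color (24 bits per pixel) and counts only visible pixels. It ignores blanking intervals, line-encoding overhead, chroma subsampling, and Display Stream Compression, so the result is a lower bound on what a mode needs. The helper names `raw_video_gbps` and `report` are hypothetical.

```python
# Rough lower bound on the data rate a video mode needs, counting only
# visible pixels. Real HDMI signals also carry blanking intervals and
# line-encoding overhead, so actual requirements are higher than this.

def raw_video_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Active-pixel data rate in gigabits per second (1 Gbps = 1e9 bits/s)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

# Nominal maximum link bandwidth, taken from the comparison table.
LINK_GBPS = {"HDMI 2.0": 18.0, "HDMI 2.1": 48.0}

# Assumption: 8 bits per color channel, full RGB / 4:4:4 (24 bits per pixel).
MODES = {
    "4K@60Hz": (3840, 2160, 60, 24),
    "4K@120Hz": (3840, 2160, 120, 24),
}

def report() -> dict:
    results = {}
    for name, mode in MODES.items():
        need = raw_video_gbps(*mode)
        # If even the raw pixel data exceeds a link's capacity, that mode
        # cannot fit uncompressed at this color depth, whatever the overhead.
        results[name] = {
            "raw_gbps": round(need, 2),
            "exceeds": {link: need > cap for link, cap in LINK_GBPS.items()},
        }
    return results

if __name__ == "__main__":
    for name, r in report().items():
        print(name, r["raw_gbps"], "Gbps", r["exceeds"])
```

On this rough count, 4K@120Hz needs about 23.9 Gbps of pixel data alone, already more than HDMI 2.0's 18 Gbps. 4K@60Hz (about 11.9 Gbps) stays under both limits. Because the estimate is a lower bound, an "exceeds" result is conclusive, while staying under a limit only means the mode might fit.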

Why It Matters

- Gamers: HDMI 2.1’s 4K@120Hz and VRR deliver smoother, more responsive gameplay, which matters most in fast-paced titles.

- Home Theater Fans: 8K support and eARC, which can send lossless audio such as Dolby TrueHD (including Dolby Atmos tracks) from the TV to a soundbar or receiver, make 2.1 ideal for high-end setups.

- Everyday Users: HDMI 2.0 still works for 4K@60Hz streaming (Netflix, Disney+), so no need to upgrade unless you want premium features.

Should You Upgrade?

If you own a current-gen console (PS5, Xbox Series X), an 8K TV, or a high-refresh-rate gaming monitor, HDMI 2.1 is worth it. For basic 4K streaming, HDMI 2.0 suffices. Keep in mind that HDMI 2.1 features only work when every link in the chain supports them: the source (such as a console), the display or receiver, and the cable. For cables, look for Ultra High Speed HDMI certification, which is rated for the full 48 Gbps.